Preface

In big data scenarios, it’s very common to load large amounts of data into a program for processing. For users familiar with Pandas, a common approach is a for-loop that keeps appending new data to an existing DataFrame. Reading all files sequentially this way works, but it becomes time-consuming as the data volume grows. This is where Dask shines. Dask integrates with NumPy and Pandas, mirrors much of their APIs, and adds parallel data loading and processing. This article shows how to use Dask to simplify loading a large number of CSV files.

Review of Pandas

Before introducing Dask’s solution, let’s review how to read large datasets using Pandas. Assume the data directory contains hundreds of CSV files.

Using Pandas, the process to read these files sequentially would be:

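
A minimal sketch of such a loop, assuming the files sit under data/ and share the same columns. The function name load_csvs_sequentially and the data/*.csv pattern are illustrative choices, not taken from the original listing:

```python
import glob

import pandas as pd


def load_csvs_sequentially(pattern):
    """Read every CSV matching `pattern` one by one and append it to a running DataFrame."""
    # Collect the matching filenames and sort them for a stable order.
    filenames = sorted(glob.glob(pattern))

    # Start from an empty DataFrame so that concat has something to append to.
    df = pd.DataFrame()

    for filename in filenames:
        # Each iteration reads one file and copies the accumulated data again.
        df = pd.concat([df, pd.read_csv(filename)], ignore_index=True)

    return df


# Hypothetical usage: df = load_csvs_sequentially("data/*.csv")
```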

In the code above, we use glob to get all CSV filenames in the data directory and sorted to sort them. We create an empty DataFrame before reading. Then, a for-loop reads each CSV file sequentially and appends it to the DataFrame. While straightforward, this method has a few issues:

- We need to create an empty DataFrame before using concat to append data.

- When the number of files is very large, the for-loop takes a lot of time to read the data.

Of course, we could avoid creating an empty DataFrame by using if with enumerate to handle the first file differently, but that adds an if...else check to every iteration and still reads the files one at a time.
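
As an aside, one Pandas-only variant that needs neither the empty DataFrame nor a per-iteration check is to collect the frames in a list and concatenate once. This is a sketch of a common idiom rather than something the article itself describes, and the function name is hypothetical. It is still sequential, so it does not remove the second issue:

```python
import glob

import pandas as pd


def load_csvs_single_concat(pattern):
    """Read every CSV matching `pattern`, then combine them with a single concat call."""
    filenames = sorted(glob.glob(pattern))

    # Read each file into its own DataFrame; nothing is appended yet.
    frames = [pd.read_csv(filename) for filename in filenames]

    # pd.concat raises on an empty list, so return an empty frame in that case.
    if not frames:
        return pd.DataFrame()

    # One concat at the end avoids re-copying the accumulated data on every iteration.
    return pd.concat(frames, ignore_index=True)
```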

Introduction to Dask

Dask is a Python library that provides parallel and distributed computing capabilities. Its core design uses lazy evaluation: operations are not executed immediately but recorded as a graph of tasks, and nothing runs until .compute() is called. Because Dask sees the whole graph before running it, it can schedule independent tasks in parallel and avoid holding more intermediate data in memory than necessary. For example, Dask can read all the CSV files in parallel and then combine them. Alternatively, we can modify the workflow to process each file as it’s read and combine the results later.
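
To make the lazy behavior concrete, here is a small sketch using dask.delayed. The functions double and add and the calls list are made up for illustration; the point is only that nothing runs until .compute() is called:

```python
import dask

calls = []  # records which inputs were actually processed


@dask.delayed
def double(x):
    calls.append(x)
    return 2 * x


@dask.delayed
def add(x, y):
    return x + y


# Building the expression only records tasks; no function body runs yet.
total = add(double(1), double(2))
calls_before = list(calls)  # still empty at this point

# .compute() executes the recorded tasks; the two double() calls are independent.
result = total.compute()  # 2*1 + 2*2 = 6
```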

How to Quickly Load Large Datasets with Dask

Here’s a simple example demonstrating how to load data using Dask:


As you can see, using Dask to read data is straightforward. Simply pass the list of file paths to the read_csv function and call .compute() to load all the data; the result is a regular Pandas DataFrame. Because dask.dataframe is largely compatible with Pandas, you can also define a series of operations before calling .compute().

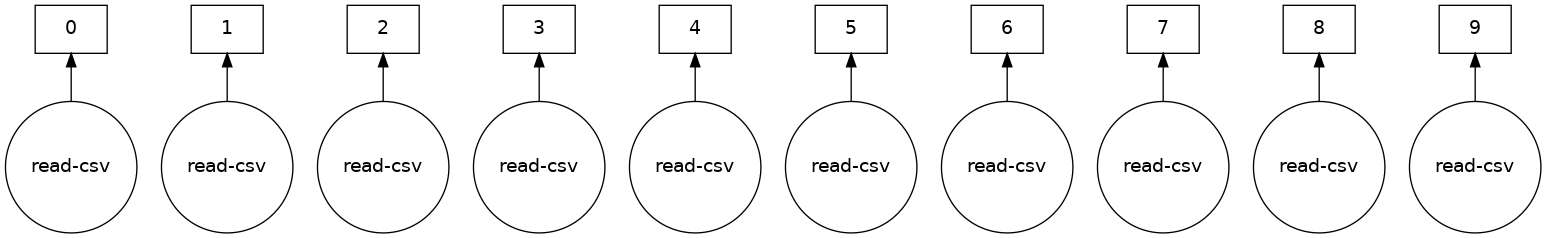

Visualizing Dask’s Processing Flow

Dask provides a visualization feature (.visualize()) that helps users understand how computations are executed within Dask. To use it, you need to install Graphviz (both the system library and the graphviz Python package). We can visualize the previous example to see how Dask processes the files (for better readability, we’ll only load ten files):


Dask will return an image showing the computation graph.

Note that .visualize() is available only on lazy Dask objects. The result of .compute() is a regular Pandas DataFrame, which no longer carries a computation graph to display.

Key Takeaways

In this article, we introduced the powerful parallel data processing library Dask. By using Dask, we can:

- Simplify and speed up data loading

- Accelerate data reading and processing through parallelism

- Reduce computation via lazy evaluation

When using Dask, remember that no computation occurs until .compute() is called.